Reimagined architecture built for speed

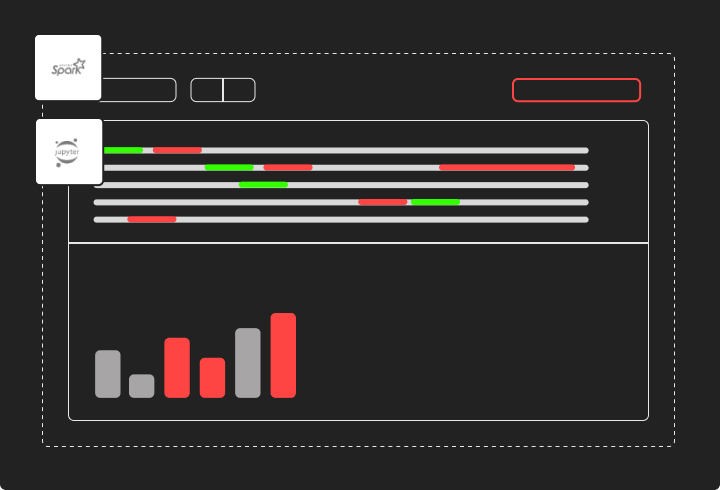

A familiar Jupyter notebook with a streamlined interface and superior functionality, built specifically for Apache Spark, transforms your users' experience.

PySpark notebooks

High-performance, hassle-free ML/AI platform

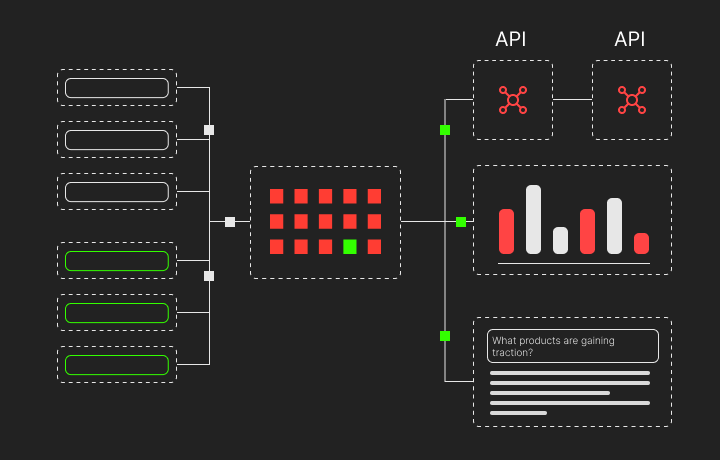

Our game changer is our special-purpose cloud optimized for Spark compute with smart workload distribution across CPUs and GPUs. That architecture lets us scale to a higher number of cores, driving performance up and costs down.

We know from direct experience that users are reluctant to switch if it requires a new UI, so we present superior functionality in a familiar Jupyter notebook.

Legacy cloud computing hits the customer with two unpredictable costs: the usage charge and a platform fee. Baltoro users have only one cost: a low, fixed monthly subscription.

Leverage a purpose-built platform

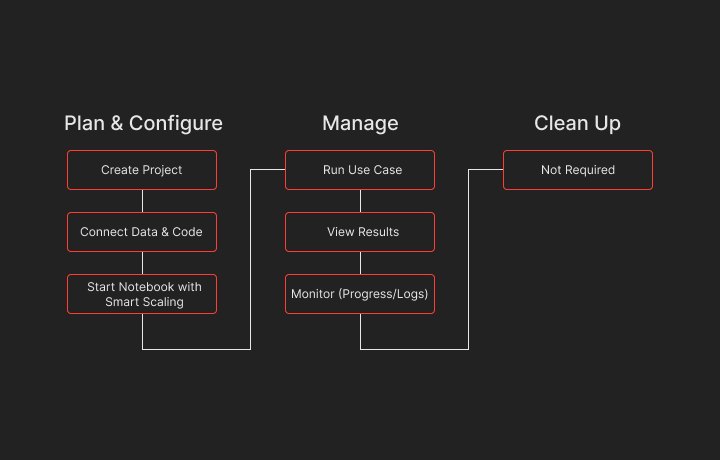

Erase the burden of scaling, provisioning, selecting cores, GPUs, memory, and storage, and installing software. Baltoro's serverless compute platform frees you to focus on your job.

No need to migrate data

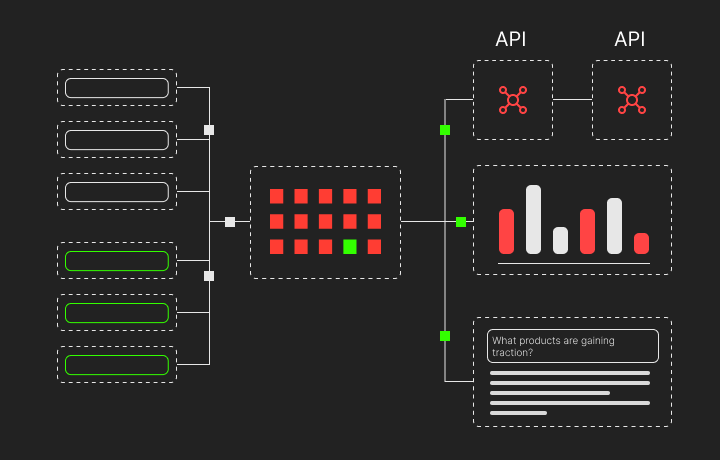

We integrate seamlessly with AWS, Azure, and GCP. All data stays with your current provider, so there is no need to migrate it. This makes it easier to manage your data and access it whenever you need it. Beyond data, we also integrate with your code repository, such as GitHub, Bitbucket, or GitLab.
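As a rough illustration of reading data where it already lives, the sketch below uses standard PySpark and the usual Hadoop connector URI schemes (`s3a://`, `gs://`, `abfss://`). It is not Baltoro's own API: the helper names `storage_uri` and `read_in_place` are hypothetical, and it assumes the notebook already provides a `spark` session with the relevant connectors and credentials configured.

```python
def storage_uri(provider: str, container: str, path: str, account: str = "") -> str:
    """Build a Hadoop-style URI for data that stays with its current cloud provider.

    Hypothetical helper for illustration; the URI schemes are the standard
    ones used by the S3A, GCS, and ABFS connectors.
    """
    path = path.lstrip("/")
    if provider == "aws":
        return f"s3a://{container}/{path}"
    if provider == "gcp":
        return f"gs://{container}/{path}"
    if provider == "azure":
        if not account:
            raise ValueError("Azure requires a storage account name")
        return f"abfss://{container}@{account}.dfs.core.windows.net/{path}"
    raise ValueError(f"unknown provider: {provider}")


def read_in_place(spark, provider: str, container: str, path: str, account: str = ""):
    """Read Parquet directly from the provider's storage into a Spark DataFrame.

    `spark` is an existing SparkSession (assumed to be supplied by the notebook).
    """
    return spark.read.parquet(storage_uri(provider, container, path, account))
```

In a notebook, a call might look like `df = read_in_place(spark, "aws", "example-bucket", "events/")`, where `example-bucket` is a placeholder name.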

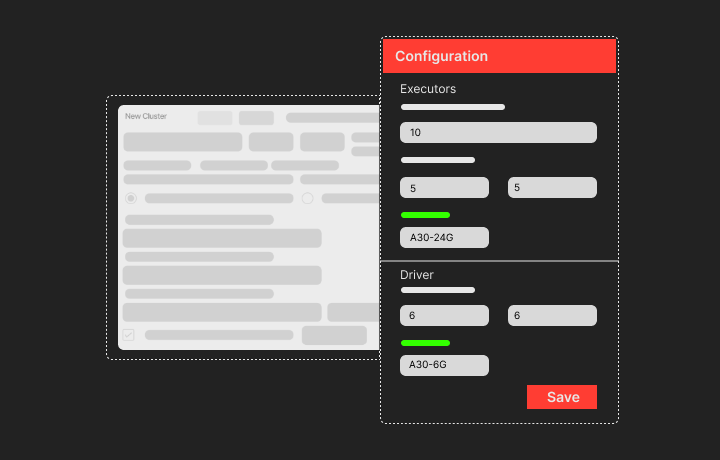

Simplified cluster configuration and fast launch times

We eliminate the hassles of slow launch times and time-consuming system configuration. With dedicated resource allocation per notebook and built-in cloud connectors, we have simplified the experience compared to other providers.

Fast

Functional

Easy-to-use

Baltoro offers rich new functionality built specifically for Apache Spark. It runs close to the bare metal, and features like parallelized loads on GPUs with 100% utilization and real-time workload optimization, including autoscaling compute, are part of the platform.

Hassle-free cluster configurations

Reusable custom environments

Single click to view runtime logs

Dedicated resource allocation per notebook

Built-in multi-cloud connectors

Job scheduling and workflows

REST API (including inferencing API)

User-friendly notebooks

Notebook revision history and repository integration

Ready to get started?

Train, maintain & run GPT models on your private/enterprise data